Seth Z. Zhao

I am a second-year Computer Science PhD student at UCLA, advised by Professor Bolei Zhou and Professor Jiaqi Ma.

Previously, I received my M.S. and B.A. in Computer Science from UC Berkeley, where I was fortunate to work with Professor Masayoshi Tomizuka, Professor Allen Yang, and Professor Constance Chang-Hasnain. I have also been a research intern at Qualcomm and Honda Research Institute (HRI).

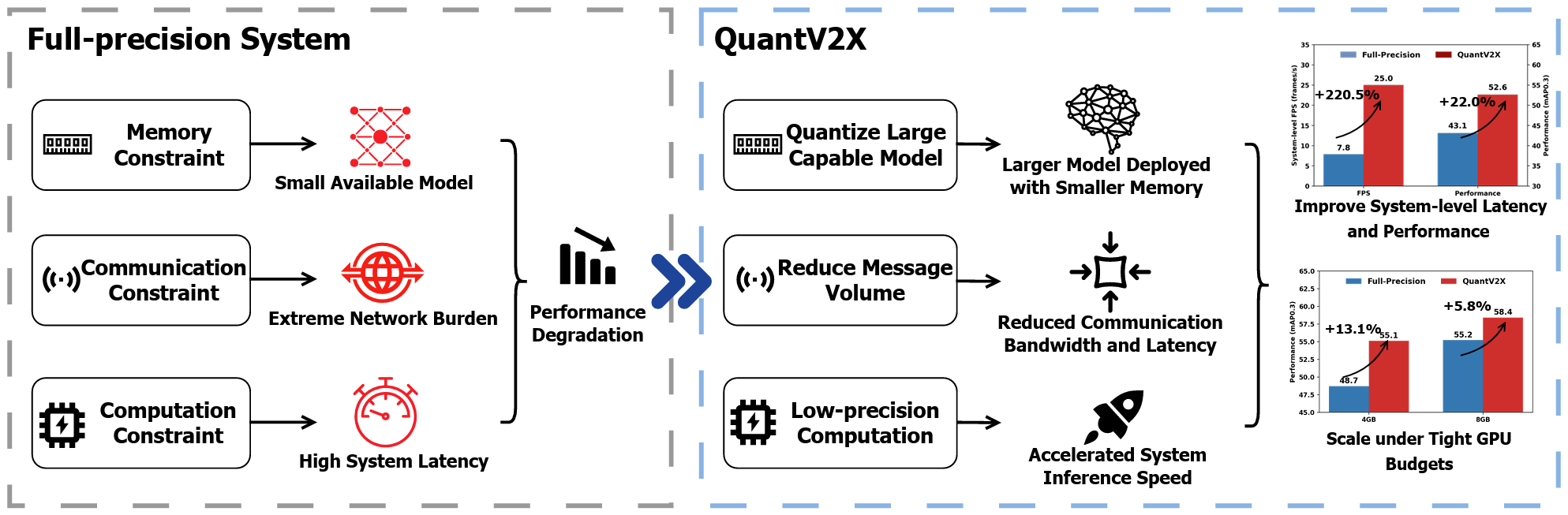

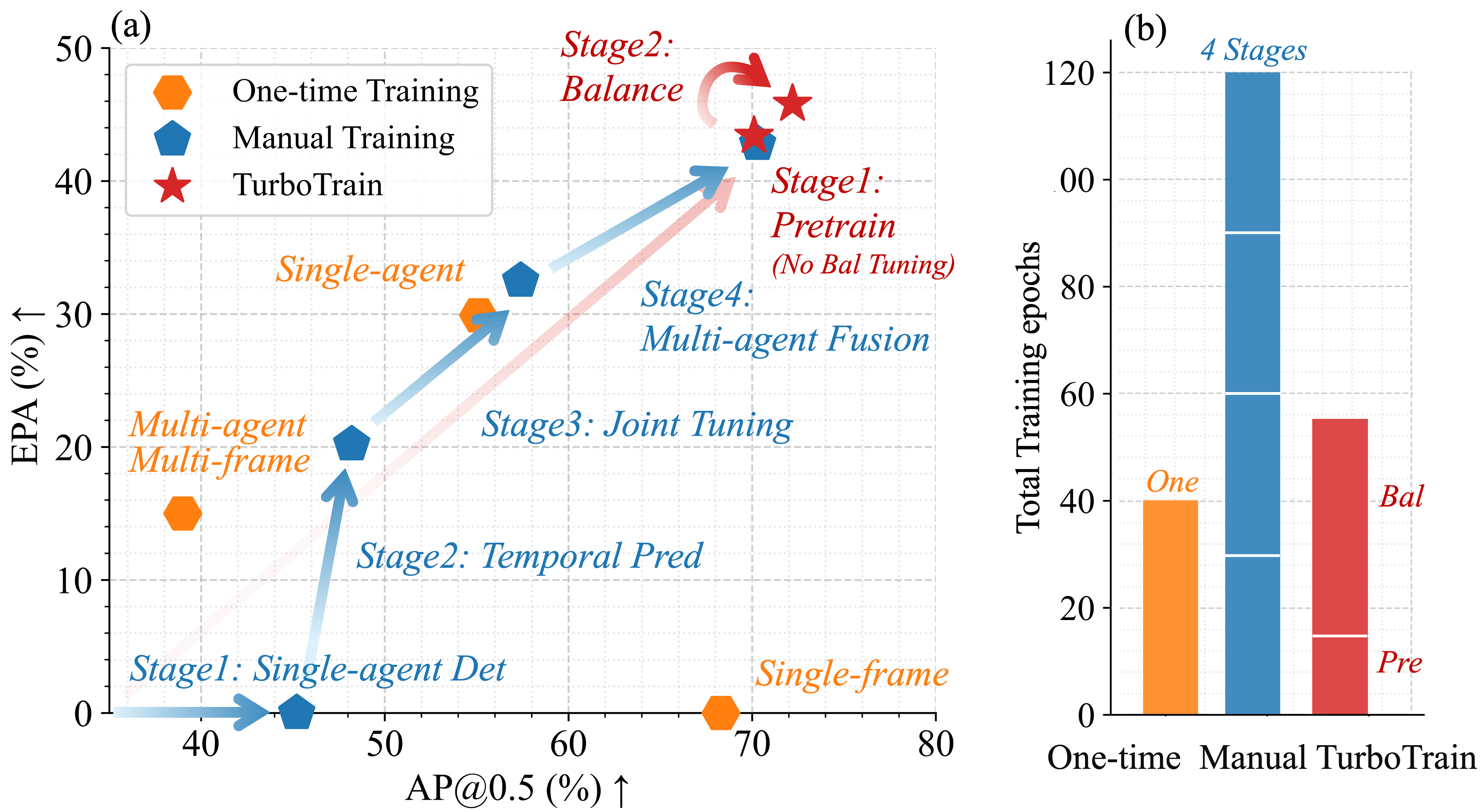

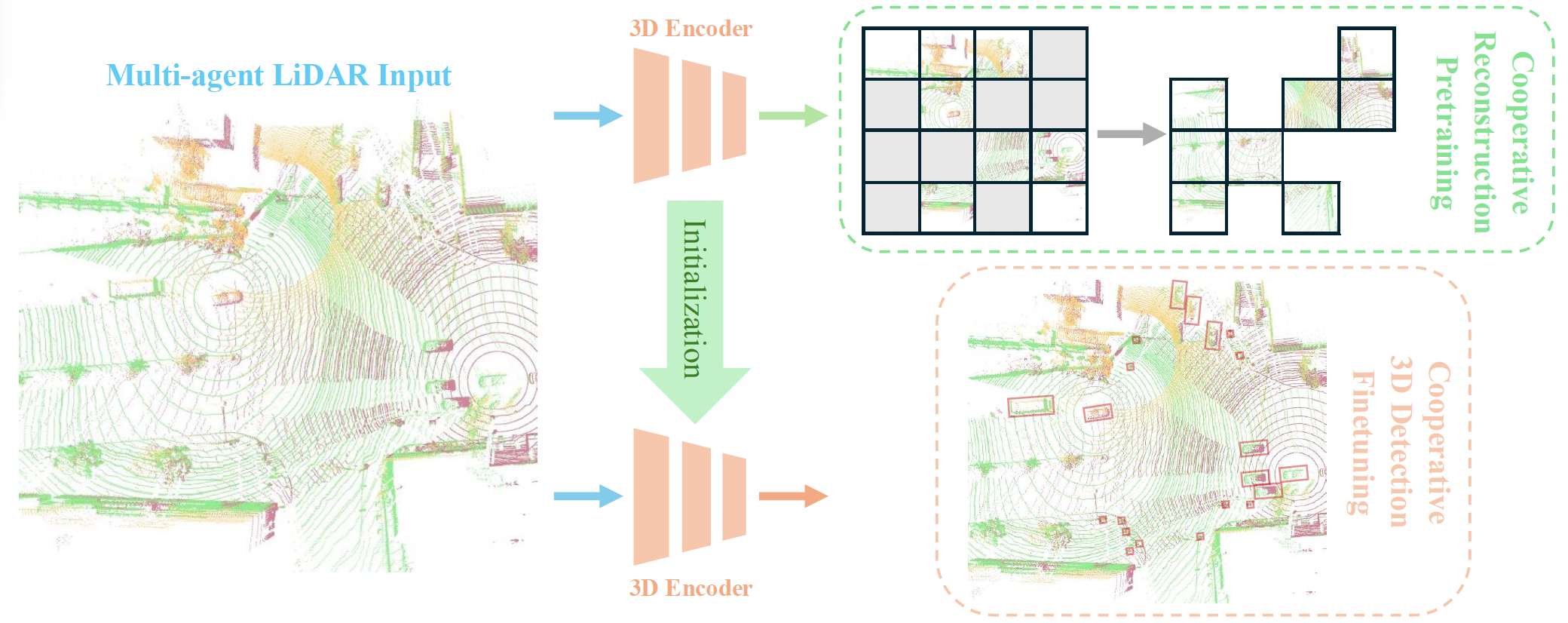

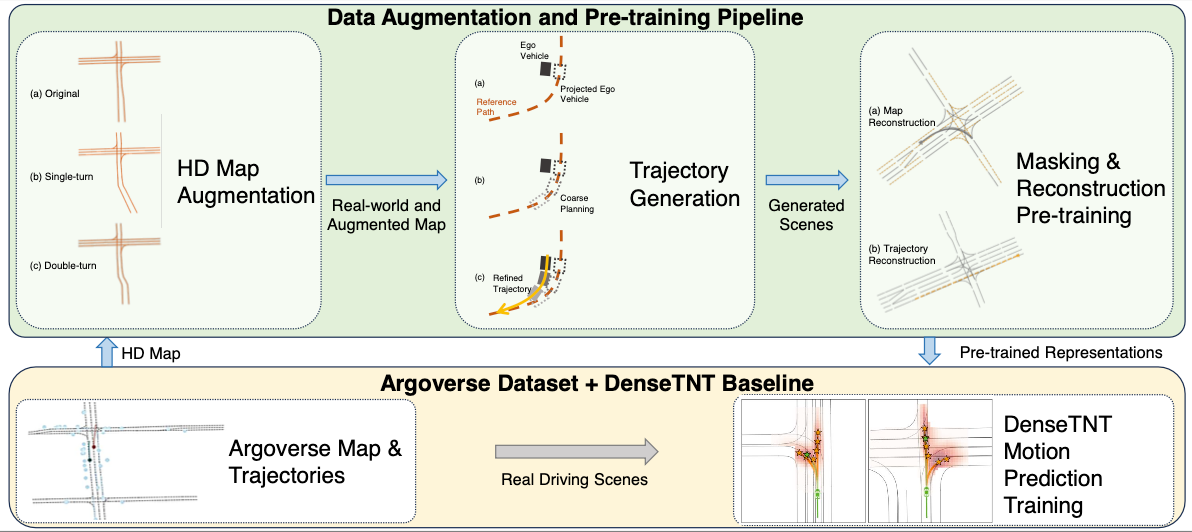

My current research focuses on End-to-End Autonomous Driving, Cooperative Driving, and World Modeling.